Background

In an ongoing series, I am evaluating my previous 6 month bets and writing new 6-month bets. Before, I do so, I must write a brief description of the state of affairs:

- Reasoning models were released by OpenAI (o1 and o1-mini)

- Sonnet 3.6 was released but Claude 3.5 Opus was not released

- LLAMA 3.x iterations were released were smaller and offered comparable performance as larger models

- DeepSeek open-sourced it’s reasoning models so we have a good idea about reasoning

Evaluating Previous Bets

Bet 1: Claude 3.5 Opus will be the LLM frontier model

Result: ❌ Lose

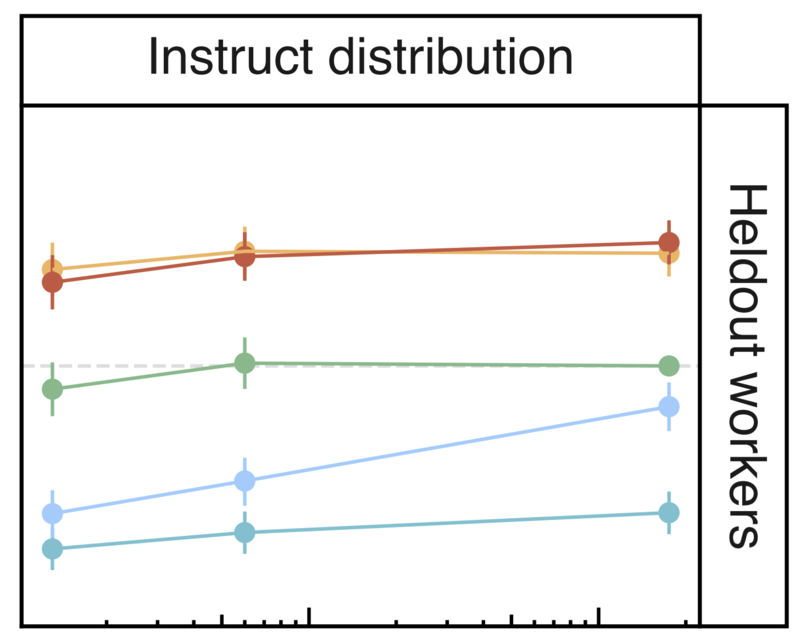

Claude 3.5 Opus was never released. There is a new regime where smaller models are doing similar/better than larger models. Part of this is smaller models are trained much longer than what scaling laws would suggest. Second reason, in my view, is better supervised finetuning data. Thirdly, it’s a new RL regime that allows models to do tasks that require more steps. The second reason suggests possible distillation from larger models to smaller models. The third step is reminiscent of Instruct-GPT which showed a model A outperforming a model B where B was 100x larger than A. This is my view has significantly altered our view of model capabilities as measured by benchmarks.

(Figure from “Training language models to follow instructions with human feedback” paper, showing win-rate against SFT 175B GPT3)

Bet 2: OpenAI will shift from ChatGPT product to an agent deployment

Result: ✅ Win

OpenAI will shift from ChatGPT product to an agent deployment i.e there will new post-training and/or scaffolding / “unhobling” that OpenAI will showcase in 6 months.

OpenAI showcased operator & o1 series. Dario Amodei is now speaking of virtual collaborator.

Bet 3: LLAMA3-400B multimodal release

Result: ✅ Win

If LLAMA3-400B releases, there will be a end-to-end finetuned multimodal (text/image guaranteed) possibly (text/image/audio) LLAMA3-400B.

Meta themselves did this except for image generation. I would still consider it a win.

Bet 4: OpenAI multimodal API restrictions

Result: ❌ Lose

OpenAI would not release end-to-end multimodal freely on their API.

OpenAI released their API freely. In hindsight, my bet 3 and 4 contradictory. If I believed there would a open-source end-to-end multimodal, it would make sense for OpenAI to release their API access and gain market share. Otherwise, potential customers would just use the open-source one.

New Bets

Bet 1: Useful mobile / laptop / desktop AI assistants are deployed

The economic value of small language models has risen since they will be able to do small tasks consistently. Moreover, there are many private tasks which I would not want to give API access to cloud-based AI. It makes sense that Gemini AI or Siri gets a boost and can do tasks like pull up apps and take actions in apps. This might mean that mobile apps would now need to be local AI-friendly to leverage utility.

Verifiability: Easy, since there will be an announcement

Bet 2: Benchmarks now measure tasks that take any human beings few minutes but have economic value

Previously, we saw benchmarks only test very specific intelligence (they can only be solved by expert humans but demonstrate low economic value). Now, there will be benchmarks measuring utility on generic tasks like paper filing or web lookups (tasks that are easy to do for a human but have economic value).

Verifiability: Easy since there will be new benchmarks

Bet 3: RL Research Developments

Following will be written about:

- There seems to be a RL limit of 8000 steps due to increasing answer lengths

- There will be system works trying to optimize the generation part of RL traces

- There will be works suggesting ideas for how to increase exploration in RL-training

- The effect of SFT data and prompting has in RL training steps

- New Scaling Law regime: How much compute should one allocate in pre-training vs post-training

Verifiability: Medium, there should be papers but this might spread out across multiple works.

Bet 4: Anthropic releases new model / framework for the virtual collaborator

Ok I am going to divide this bet into the following scenario:

- Pessimistic: They put out a cheap model for collaborating on daily tasks

- Medium: They release a model that achieves >40% on Frontier Math & Humanity’s Last Exam

- Optimistic: They release a truly superhuman creative genius limited to specific domains, that is arguably superhuman on specific reasoning tasks. Like, it can do human equivalent reasoning of few days on specific problems like number theory proofs or physics simulations.

Verifiability: Easy

End-date: End of July